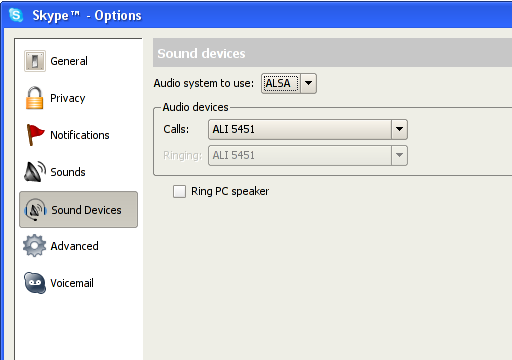

Earlier today, I saw some spikes on the load graph for the new server (where this site is hosted).

Upon checking the logs I saw a lot of these:

    134.122.53.221 - - [01/May/2020:12:21:54 +0000] "POST //xmlrpc.php HTTP/1.1" 200 264 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/78.0.3904.108 Safari/537.36"
    134.122.53.221 - - [01/May/2020:12:21:55 +0000] "POST //xmlrpc.php HTTP/1.1" 200 264 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/78.0.3904.108 Safari/537.36"

    198.98.183.150 - - [01/May/2020:13:44:24 +0000] "POST //xmlrpc.php HTTP/1.1" 200 265 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/80.0.3987.149 Safari/537.36"
    198.98.183.150 - - [01/May/2020:13:44:25 +0000] "POST //xmlrpc.php HTTP/1.1" 200 265 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/80.0.3987.149 Safari/537.36"
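To see which addresses send the most requests like these, a small helper along the following lines can tally them. This is only a sketch: it assumes the standard combined log format shown above, and both the function name `count_xmlrpc_posts` and the log path in the usage comment are my own placeholders.

```shell
# Count POST requests to xmlrpc.php per client IP, busiest first.
# Reads combined-format access log lines from the given files, or from stdin.
count_xmlrpc_posts() {
    # $1 = client IP, $6 = opening quote plus method, $7 = request path
    awk '$6 == "\"POST" && $7 ~ /xmlrpc\.php/ { hits[$1]++ }
         END { for (ip in hits) print hits[ip], ip }' "$@" | sort -rn
}

# Usage (the log path is an assumption; adjust it to your setup):
#   count_xmlrpc_posts /var/log/nginx/access.log | head
```

Each output line is a request count followed by the IP it belongs to.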

I won’t name the owners of the source IPs; technical readers can look them up if they are interested. However, the second IP comes from an IP range that is rather interesting.

Searching the Internet, I found that many people consider xmlrpc.php a problem. For those who are not familiar with WordPress, this file handles external communication, for example when you manage your site from the mobile application or when you use Jetpack.

There are plugins to disable XML-RPC such as this one, but I use the app from time to time, so I would like to keep xmlrpc.php working.

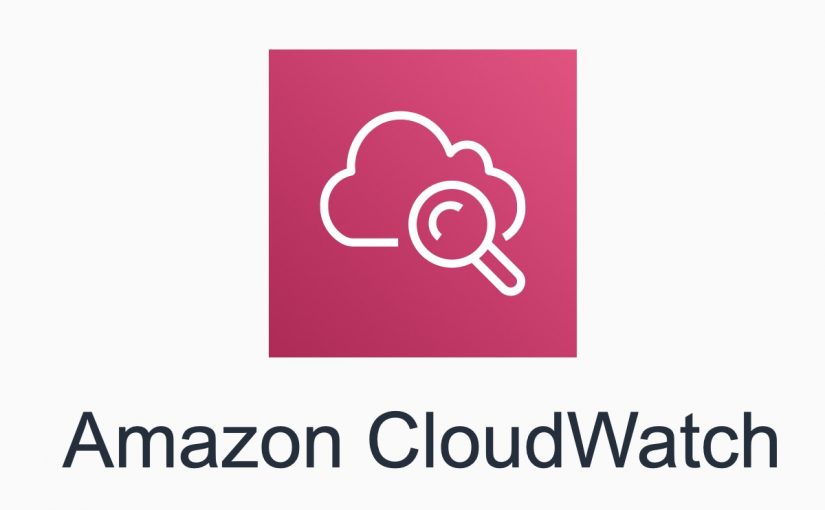

The official Jetpack website provides this list for whitelisting purposes.

I have been restricting access to my /wp-admin URL for ages, using Nginx. I think it is a good idea to do the same for xmlrpc.php.

    location ~ ^/(xmlrpc\.php$) {
        include conf.d/includes/jetpack-ipvs-v4.conf;
        deny all;

        include fastcgi.conf;
        fastcgi_intercept_errors on;
        fastcgi_pass php;
    }

A simple script converts this IP list into the Nginx include file that the configuration above consumes:

    #!/bin/bash
    # Build an Nginx allow-list from Jetpack's published IPs; reload only on change.

    FILENAME=jetpack-ipvs-v4.conf
    CONF_FILE=/etc/nginx/conf.d/includes/${FILENAME}

    wget -q -O /tmp/ips-v4.txt https://jetpack.com/ips-v4.txt

    # Continue only if the download produced a non-empty file
    if [ -s /tmp/ips-v4.txt ]; then
        # Turn each address into an "allow <ip>;" directive
        awk '{print "allow " $1 ";"}' /tmp/ips-v4.txt > "/tmp/${FILENAME}"

        # Make sure the target file exists so it can be compared
        [ -s "${CONF_FILE}" ] || touch "${CONF_FILE}"

        if [ "$(diff "/tmp/${FILENAME}" "${CONF_FILE}")" != "" ]; then
            echo "Files differ, replacing ${CONF_FILE} and reloading nginx"
            mv -fv "/tmp/${FILENAME}" "${CONF_FILE}"
            systemctl reload nginx
        else
            echo "File /tmp/${FILENAME} matches ${CONF_FILE}, not doing anything"
        fi
    fi

    rm -f /tmp/ips-v4.txt
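To make the conversion step concrete, here is a minimal sketch of the awk transformation run on sample input. The addresses are from the documentation-only ranges and are not Jetpack's real list. The helper name `to_allow_rules` is hypothetical, and the `NF` guard that skips blank lines is a small addition of mine that the script above does not have.

```shell
# Convert a list of addresses (one per line on stdin) into Nginx allow directives.
to_allow_rules() {
    # NF is zero for blank lines, so they are skipped (my addition)
    awk 'NF { print "allow " $1 ";" }'
}

# Sample input only: documentation-range addresses, NOT Jetpack's real IPs.
printf '%s\n' '192.0.2.10' '198.51.100.0/24' | to_allow_rules
```

This prints one `allow` line per address, which is the form the `include` line inside the `location` block expects before `deny all;` rejects everyone else.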

It can be periodically executed by cron so that when the IP list changes, the configuration gets updated.
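As a rough sketch of the cron setup, the snippet below builds an entry in the `/etc/cron.d` format, which includes a user field. The script path, the schedule, and the file name under `/etc/cron.d` are all assumptions of mine; the post does not say where the script lives or how often it runs.

```shell
# Hypothetical install path for the update script; adjust to where you saved it.
SCRIPT_PATH=/usr/local/sbin/update-jetpack-ips.sh

# cron.d format: minute hour day-of-month month day-of-week user command.
# Here: run as root at minute 17 of every hour (an arbitrary choice).
CRON_LINE="17 * * * * root ${SCRIPT_PATH}"

printf '%s\n' "${CRON_LINE}"

# To install it (as root), write that line to a file under /etc/cron.d/, e.g.:
#   printf '%s\n' "${CRON_LINE}" > /etc/cron.d/jetpack-ips
```

Since the script only reloads Nginx when the list actually changes, running it hourly should be cheap.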

Now, any request to /xmlrpc.php from an IP that is not on Jetpack's list receives a 403 Forbidden error.
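One way to check this from a machine that is not on the allow list is to ask curl for just the status code. This is a sketch; the function name `xmlrpc_status` is hypothetical, and `example.com` stands in for your own domain.

```shell
# Print only the HTTP status code returned for a POST to the given URL.
xmlrpc_status() {
    # -s: silent, -o /dev/null: discard the body, -w: print the status code
    curl -s -o /dev/null -w '%{http_code}' -X POST "$1"
}

# Usage (example.com is a placeholder for your own site):
#   xmlrpc_status https://example.com/xmlrpc.php
```

If the rule is in effect, this should report 403 from any address outside the list.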

Have fun!

The current “stable” distribution of Debian GNU/Linux is version 4.0r0, codenamed etch. It was released on April 8th, 2007.
