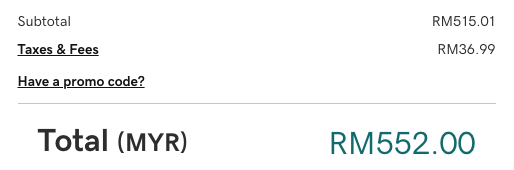

GKE version: 1.20.12-gke.1500

Helm Chart version: 1.8.0

In pursuit of Elasticsearch knowledge, I attempted to install OpenSearch on a GKE cluster. I followed the instructions at https://opensearch.org/docs/latest/opensearch/install/helm/, but the pods crashed.

    NAME                          READY   STATUS             RESTARTS   AGE
    opensearch-cluster-master-1   0/1     CrashLoopBackOff   1          13s
    opensearch-cluster-master-0   0/1     CrashLoopBackOff   1          13s

Looking at the logs from the pod:

    [1]: max virtual memory areas vm.max_map_count [65530] is too low, increase to at least [262144]
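The log line is a simple threshold comparison: the kernel value (65530) is below the 262144 that OpenSearch asks for. As a rough sketch, the same comparison can be done by hand on any Linux host. The function name `check_max_map_count` is made up for this illustration and is not part of OpenSearch or the Helm chart.

```shell
#!/bin/sh
# Hypothetical helper (not part of OpenSearch): compare a vm.max_map_count
# value against the minimum reported in the pod log.
# Usage: check_max_map_count CURRENT [REQUIRED]
check_max_map_count() {
  current="$1"
  required="${2:-262144}"   # minimum taken from the log message above
  if [ "$current" -ge "$required" ]; then
    echo "ok: vm.max_map_count=$current"
  else
    echo "too low: vm.max_map_count=$current, need at least $required"
    return 1
  fi
}
```

On a Linux machine, `check_max_map_count "$(cat /proc/sys/vm/max_map_count)"` feeds in the live kernel value.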

Looking at values.yml I tried to add:

    sysctl:
      enabled: false

    sysctlVmMaxMapCount: 262144

But I was greeted with the following when I described the pods:

    Events:
      Type     Reason           Age   From     Message
      ----     ------           ----  ----     -------
      Warning  SysctlForbidden  1s    kubelet  forbidden sysctl: "vm.max_map_count" not whitelisted

Since I am using Terraform, I looked at the provider documentation and found that vm.max_map_count is not among the sysctls supported by the “linux_node_config” block of the “google_container_cluster” resource.

Searching the Internet, I found this excellent post, A Guide to Deploy Elasticsearch Cluster on Google Kubernetes Engine, but the init container I added based on it still failed: it wasn’t able to change the vm.max_map_count value.

    sysctl: error setting key 'vm.max_map_count': Permission denied

So I tried adding “runAsUser: 0” to the init container's securityContext so that it runs as root, and it worked. The final block in values.yml for my init containers (specifically to deal with the sysctl issue) is below:

    extraInitContainers:
      - name: increase-the-vm-max-map-count
        image: busybox
        command:
          - sysctl
          - -w
          - vm.max_map_count=262144
        securityContext:
          privileged: true
          runAsUser: 0
      - name: increase-the-ulimit
        image: busybox
        command:
          - sh
          - -c
          - ulimit -n 65536
        securityContext:
          privileged: true
          runAsUser: 0

    helm upgrade opensearch -n opensearch -f values.yml opensearch/opensearch --version 1.8.0
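To confirm that the init container really changed the kernel setting, the value can be read back from inside one of the pods, since vm.max_map_count is a node-wide setting rather than a per-container one. A minimal sketch, assuming `kubectl` is pointed at the cluster and that the container image ships `cat`; the function name `pod_max_map_count` is made up for this example.

```shell
#!/bin/sh
# Hypothetical helper: print vm.max_map_count as seen from inside a pod.
# Usage: pod_max_map_count NAMESPACE POD
pod_max_map_count() {
  namespace="$1"
  pod="$2"
  # kubectl exec runs the command in the pod's default container
  kubectl exec -n "$namespace" "$pod" -- cat /proc/sys/vm/max_map_count
}
```

For the release above, `pod_max_map_count opensearch opensearch-cluster-master-0` should print 262144 once the init container has run on that node.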

All is well:

    NAME                          READY   STATUS    RESTARTS   AGE
    opensearch-cluster-master-0   1/1     Running   0          12m
    opensearch-cluster-master-1   1/1     Running   0          12m
    opensearch-cluster-master-2   1/1     Running   0          12m
