Since moving to a new home, I no longer leave my Linux servers running 24/7, to save on electricity bills. It is not so much about the move as it is about the tariff increase. My 500W and 400W power supplies can easily draw around 300W each, which adds up to around 432kWh per month and costs me around RM123.55 (source: TNB Tariff). That is money better spent on gadgets, craft items for my wife, or toys for my kid.
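For the curious, the 432kWh figure assumes both machines draw about 300W around the clock over a 30-day month:

$$
2 \times 300\ \text{W} \times 24\ \tfrac{\text{h}}{\text{day}} \times 30\ \text{days} = 432{,}000\ \text{Wh} = 432\ \text{kWh}
$$

The ringgit amount then follows from TNB's tiered domestic rates.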

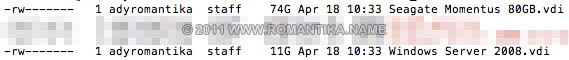

My Subversion repositories were last used in February 2009. Since the repositories are just files, I can easily transfer them to my Mac and use them locally. For me, file history and the ability to revert changes are more important than using the repository as a backup. My Mac is backed up with Time Machine anyway, so I am pretty much safe. I am obsessed with file history and proper branching in source code; sometimes I think I have a mild case of OCD. Seriously.

OK, now on to the technical part. I am not sure how my machine ended up with CollabNet’s build preinstalled, but you can install Subversion on your Mac using one of the packages listed on Apache’s official Subversion packages page.

Tested on: Snow Leopard 10.6.6

Known to work as early as: Tiger 10.4.x

My version:

```
svn, version 1.6.5 (r38866)
compiled Jun 24 2010, 17:16:45

Copyright (C) 2000-2009 CollabNet.
Subversion is open source software, see http://subversion.tigris.org/
This product includes software developed by CollabNet (http://www.Collab.Net/).
```

Please note that the method I am going to show here makes Subversion available as a service over the network (the svn:// protocol). I like to prepare for future use, for example allowing access from other machines. You can always opt for local file-based access, http, https, or svn+ssh instead, in which case you will not need the steps below.
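To make the difference between file-based and svnserve access concrete, here is a small Python 3 sketch that builds both URLs for the same repository. It assumes svnserve is started with the --root value used in the plist later in this post, and "myproject" is a hypothetical repository name. With --root, svnserve resolves svn:// paths relative to that directory, so the root itself never appears in the URL.

```python
from pathlib import PurePosixPath

# Assumed values: the repository root passed to svnserve via --root,
# and a hypothetical repository "myproject" created inside it.
SVN_ROOT = PurePosixPath("/Users/adyromantika/SVNRepository")
REPO_DIR = SVN_ROOT / "myproject"


def file_url(repo_dir: PurePosixPath) -> str:
    """Local file-based access: the full filesystem path after file://."""
    return "file://" + str(repo_dir)


def svn_url(repo_dir: PurePosixPath, root: PurePosixPath, host: str = "localhost") -> str:
    """svnserve access: the path is relative to the --root directory."""
    relative = repo_dir.relative_to(root)  # raises ValueError if outside the root
    return f"svn://{host}/{relative}"


if __name__ == "__main__":
    print(file_url(REPO_DIR))
    print(svn_url(REPO_DIR, SVN_ROOT))
```

Either URL can then be given to svn checkout. Paths containing spaces would need percent-encoding, which this sketch skips.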

On the Mac, services can be launched as permanent processes or on demand using launchd, the system-wide and per-user daemon/agent manager.

In this discussion we will be running svnserve on demand, similar to running services via inetd on Linux.
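Roughly, inetd-style on-demand launching means the manager owns the listening socket and, for each incoming connection, starts a fresh copy of the program with that connection wired to its standard input and output; that is why svnserve is given --inetd. The Python 3 toy below illustrates the pattern on macOS/Linux with a hypothetical child program that just upper-cases one line. It is a sketch of the idea, not how launchd is actually implemented.

```python
import socket
import subprocess
import sys
import threading

# Hypothetical stand-in for "svnserve --inetd": it reads one line from stdin
# and writes a reply to stdout, never touching the network itself.
CHILD = [sys.executable, "-c",
         "import sys; sys.stdout.write(sys.stdin.readline().upper())"]


def serve_on_demand(listener: socket.socket, max_connections: int) -> None:
    """Accept connections and start one child per connection (inetd "nowait")."""
    children = []
    for _ in range(max_connections):
        conn, _addr = listener.accept()
        # The accepted socket becomes the child's stdin and stdout.
        children.append(subprocess.Popen(CHILD, stdin=conn.fileno(), stdout=conn.fileno()))
        conn.close()  # the child keeps its own copy of the connection
    for child in children:
        child.wait()


def demo() -> str:
    """Send one line through the on-demand server and return the reply."""
    listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    listener.bind(("127.0.0.1", 0))  # port 0: let the OS pick a free port
    listener.listen()
    port = listener.getsockname()[1]
    server = threading.Thread(target=serve_on_demand, args=(listener, 1))
    server.start()
    chunks = []
    with socket.create_connection(("127.0.0.1", port)) as client:
        client.sendall(b"hello svn\n")
        while True:
            data = client.recv(4096)
            if not data:  # the child exited and the connection closed
                break
            chunks.append(data)
    server.join()
    listener.close()
    return b"".join(chunks).decode()


if __name__ == "__main__":
    print(demo())
```

No child process exists until a client connects, which matches what you will see with svnserve below.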

It is fairly straightforward: create a .plist file similar to the one below. I named mine org.apache.subversion.svnserv.plist.

```xml
<?xml version="1.0" encoding="UTF-8"?>
<!DOCTYPE plist PUBLIC "-//Apple Computer//DTD PLIST 1.0//EN"
"http://www.apple.com/DTDs/PropertyList-1.0.dtd">
<plist version="1.0">
<dict>
	<key>Debug</key>
	<false />
	<key>Disabled</key>
	<false />
	<key>GroupName</key>
	<string>staff</string>
	<key>Label</key>
	<string>org.apache.subversion.svnserv</string>
	<key>OnDemand</key>
	<true />
	<key>Program</key>
	<string>/usr/bin/svnserve</string>
	<key>ProgramArguments</key>
	<array>
		<string>svnserve</string>
		<string>--inetd</string>
		<string>--root=/Users/adyromantika/SVNRepository</string>
	</array>
	<key>ServiceDescription</key>
	<string>SVN Code Version Management</string>
	<key>Sockets</key>
	<dict>
		<key>Listeners</key>
		<dict>
			<key>SockFamily</key>
			<string>IPv4</string>
			<key>SockServiceName</key>
			<string>svn</string>
			<key>SockType</key>
			<string>stream</string>
		</dict>
	</dict>
	<key>Umask</key>
	<integer>2</integer>
	<key>UserName</key>
	<string>adyromantika</string>
	<key>inetdCompatibility</key>
	<dict>
		<key>Wait</key>
		<false />
	</dict>
</dict>
</plist>
```

Things you need to change:

- Line 17 (the Program value): change /usr/bin/svnserve to the path of your own svnserve installation.

- Line 22 (the --root argument): change this to your own repository root. This is not the folder containing the “conf”, “db”, etc. folders but the folder one level up, so that svnserve can serve multiple repositories.

- Line 11 (GroupName): the group you want the svnserve process to run as. As you can see, I am lazy, so I used the default group “staff”.

- Line 41 (UserName): the username you want the svnserve process to run as. I used my own user ID, which is not the best security practice, but as I mentioned earlier, I am lazy.
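If you would rather not hand-edit the XML, the same plist can be generated with the plistlib module from the Python 3 standard library. This is a sketch: the four values at the top are the ones from my setup and are the parts you would adjust; the keys mirror the plist above, and the output file name matches the one I used.

```python
import plistlib

# Values to adjust for your machine (these are the ones from my setup).
SVNSERVE = "/usr/bin/svnserve"
REPO_ROOT = "/Users/adyromantika/SVNRepository"  # parent of the repositories
GROUP = "staff"
USER = "adyromantika"

LABEL = "org.apache.subversion.svnserv"


def build_job() -> dict:
    """Return the launchd job described in this post as a Python dict."""
    return {
        "Debug": False,
        "Disabled": False,
        "GroupName": GROUP,
        "Label": LABEL,
        "OnDemand": True,
        "Program": SVNSERVE,
        "ProgramArguments": ["svnserve", "--inetd", f"--root={REPO_ROOT}"],
        "ServiceDescription": "SVN Code Version Management",
        # launchd listens on the "svn" service port (3690 via /etc/services).
        "Sockets": {
            "Listeners": {
                "SockFamily": "IPv4",
                "SockServiceName": "svn",
                "SockType": "stream",
            }
        },
        "Umask": 2,
        "UserName": USER,
        # Wait=False: one svnserve process per incoming connection.
        "inetdCompatibility": {"Wait": False},
    }


if __name__ == "__main__":
    # Written to the current directory; copy it to /Library/LaunchDaemons/ afterwards.
    with open(LABEL + ".plist", "wb") as fp:
        plistlib.dump(build_job(), fp)
```

Running plutil -lint on the generated file is a quick way to double-check it before copying it into place.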

Now that it’s done, copy the file to /Library/LaunchDaemons/ and run the command:

```shell
sudo launchctl load /Library/LaunchDaemons/org.apache.subversion.svnserv.plist
```

You are all set. Note that since svnserve is launched on demand, you will not see an svnserve process running unless a client is connected to the repository. You can simply use telnet to verify that you get a response:

```
adymac:~ adyromantika$ telnet localhost 3690
Trying 127.0.0.1...
Connected to localhost.
Escape character is '^]'.
( success ( 2 2 ( ) ( edit-pipeline svndiff1 absent-entries commit-revprops depth log-revprops partial-replay ) ) )
```
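If telnet is not handy, a few lines of Python 3 can do the same check. This sketch assumes the default svn port 3690 and only checks that the first bytes svnserve sends start with "( success", as in the greeting above; it does not speak the rest of the svn protocol, and the function names are my own.

```python
import socket

SVN_PORT = 3690  # the "svn" entry in /etc/services


def looks_like_svn_greeting(data: bytes) -> bool:
    """True if the bytes start like the svnserve greeting shown above."""
    return data.lstrip().startswith(b"( success")


def check_svnserve(host: str = "localhost", port: int = SVN_PORT, timeout: float = 5.0) -> bool:
    """Connect, read the first chunk the server sends, and check it."""
    try:
        with socket.create_connection((host, port), timeout=timeout) as sock:
            return looks_like_svn_greeting(sock.recv(4096))
    except OSError:  # refused, timed out, unreachable, ...
        return False


if __name__ == "__main__":
    print("svnserve responded" if check_svnserve() else "no svnserve greeting")
```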

If you don’t get a response, or you get “Unable to connect to remote host”, check /etc/services and see whether these two lines are commented out. launchd looks up the “svn” service name from the SockServiceName key in this file to find port 3690:

```
svn 3690/udp # Subversion
svn 3690/tcp # Subversion
```
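Here is a rough Python 3 sketch of that check. socket.getservbyname("svn", "tcp") asks the system's services database, which on a Mac is normally backed by /etc/services, and raises OSError when there is no entry. The helper also scans the file text so you can tell whether the svn lines exist but are commented out. Both function names are hypothetical, for illustration only.

```python
import socket


def svn_port_from_system(proto: str = "tcp"):
    """Return the port for the 'svn' service, or None if the lookup fails."""
    try:
        return socket.getservbyname("svn", proto)
    except OSError:
        return None


def svn_service_lines(services_text: str):
    """Split the svn 3690 entries in /etc/services text into (active, commented)."""
    active, commented = [], []
    for raw in services_text.splitlines():
        line = raw.strip()
        is_comment = line.startswith("#")
        fields = line.lstrip("#").split()
        if len(fields) >= 2 and fields[0] == "svn" and fields[1].startswith("3690/"):
            (commented if is_comment else active).append(line)
    return active, commented


if __name__ == "__main__":
    print("getservbyname:", svn_port_from_system())
    with open("/etc/services") as fp:
        active, commented = svn_service_lines(fp.read())
    print("active:", active)
    print("commented out:", commented)
```

If the svn lines show up only in the commented-out list, remove the leading # and load the plist again.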

Good luck. If you are having issues, please comment below and I will try my best to help.